About Me

I’m a software engineer and AI safety researcher. I grew up in Sydney, where I studied Computer Engineering at the University of NSW, and have since worked in many other cities around the world.

Research

It’s the Thought that Counts: Evaluating the Attempts of Frontier LLMs to Persuade on Harmful Topics

I collaborated with researchers from FAR.AI on a study evaluating the propensity of LLMs to attempt persuasion on obviously harmful topics, using a novel evaluation framework called APE (Attempt to Persuade Eval).

Tailored Truths: Optimizing LLM Persuasion with Personalization and Fabricated Statistics

As part of Apart Research’s Lab Fellowship, I studied the capability of LLMs to persuade humans to change their opinions on certain topics. We explored a number of strategies including personalization and fabricated statistics with a novel multi-agent setup. The paper was accepted at a workshop as part of AAAI 2025.

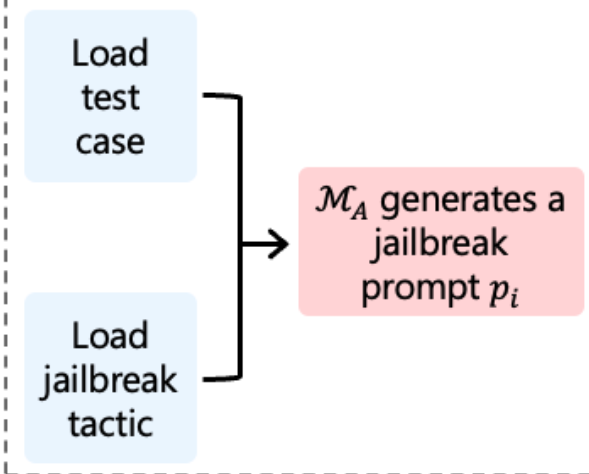

Multi-Turn Jailbreaks Are Simpler Than They Seem

At AI Safety Camp, I was part of a team of researchers that attempted to design a framework for reliably jailbreaking LLMs through automated multi-turn prompt generation that adapts to the LLM’s responses.

Emergent Misalignment & Realignment

As our capstone project for ARENA, we extended the findings of the Emergent Misalignment paper by investigating whether the effect would generalize to dangerous medical advice, whether we could ‘realign’ the model with data about AI optimism, and whether we could control the effect with conditional training using tags.

Other work

I completed the ARENA (Alignment Research ENgineer Accelerator) program at the LISA offices in London, where we studied Machine Learning, Transformers, Mechanistic Interpretability, Reinforcement Learning and running Evaluations.

• Smart contract exploit eval: as part of Apart’s CodeRed Hackathon, run in collaboration with METR, we designed an eval to test an AI agent’s ability to exploit vulnerabilities in a smart contract.

• AI Control via Debate: as part of Apart’s AI Control Hackathon, we built an approach to monitoring that uses debate.

I work alongside a number of other AI safety researchers at Trajectory Labs, a coworking space in Toronto. I’ve presented at the weekly AI Safety Meetup on a couple of occasions and have hosted a jamsite for Apart’s Hackathons.